Video Saliency Prediction Challenge 2024

- To the best of our knowledge, we provide the largest Video Saliency dataset (1500 videos), collected with crowdsourced mouse tracking and validated with conventional eye-tracking

- The dataset contains diverse, high-resolution, audio-visual content and is available under a CC-BY license

- Fixations from > 4000 unique observers and > 50 observers per video

- Evaluating on four metrics: CC, SIM, NSS, AUC Judd

- During the challenge, participants see scores on only 30% of the test set; the final results are not shown until the challenge ends
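For orientation, three of the four metrics listed above can be sketched in a few lines of NumPy. This is a minimal illustration based on the commonly used definitions of CC, SIM, and NSS, not the organizers' evaluation code; the function names and the `EPS` constant are assumptions made for this example, and AUC Judd is left out for brevity.

```python
import numpy as np

EPS = 1e-12  # assumed guard against division by zero on constant maps


def cc(pred, gt):
    """Pearson linear correlation between two saliency maps."""
    p = (pred - pred.mean()) / (pred.std() + EPS)
    g = (gt - gt.mean()) / (gt.std() + EPS)
    return float((p * g).mean())


def sim(pred, gt):
    """Histogram intersection of two non-negative maps, each normalized to sum to 1."""
    p = pred / (pred.sum() + EPS)
    g = gt / (gt.sum() + EPS)
    return float(np.minimum(p, g).sum())


def nss(pred, fixations):
    """Mean of the standardized prediction at fixated pixels (binary fixation map)."""
    p = (pred - pred.mean()) / (pred.std() + EPS)
    return float(p[fixations.astype(bool)].mean())
```

For a video, such per-frame scores would typically be averaged over frames; how the challenge aggregates them is defined by the official evaluation code.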

News and Updates

- May 10th, 2024 - Video Saliency Prediction Challenge announced

- June 3rd, 2024 - Dataset published

- June 5th, 2024 - Challenge has started!

- July 15th, 2024 - Challenge timeline updated

- July 24th, 2024 - Code Sharing phase has started (if you have not received the link to the form, please contact the organizers)

- July 30th, 2024 - Challenge Closing phase deadline updated

- August 5th, 2024 - Preliminary Final Leaderboard has been published; test dataset has been opened (see “Participate” section)

- August 10th, 2024 - After reviewing the appeal, one more team has passed the final phase; the leaderboard has been updated

- August 13th, 2024 - Challenge Paper submission deadline updated; all participants are invited!

Task

The task is to develop a Video Saliency Prediction method.

Motivation

Video Saliency Prediction remains a challenging task. Yet progress on it is needed to advance applications such as video compression, quality assessment, visual perception studies, and advertising. Our competition aims to identify the best model for the Video Saliency Prediction (VSP) task.

Challenge Timeline

- June 5th: Development and Validation phases - release of training data, opening of the validation server

- July 24th (originally July 14th): Code Sharing phase - participants should share the working code of their methods

- July 30th (originally July 19th, then July 29th): Closing phase - submissions on the test data and code sharing deadline

- July 31st (originally July 21st): Preliminary test results released to the participants

- August 18th (originally August 4th, then August 11th): Paper submission deadline for challenge entries

- September 29th: Workshop day!

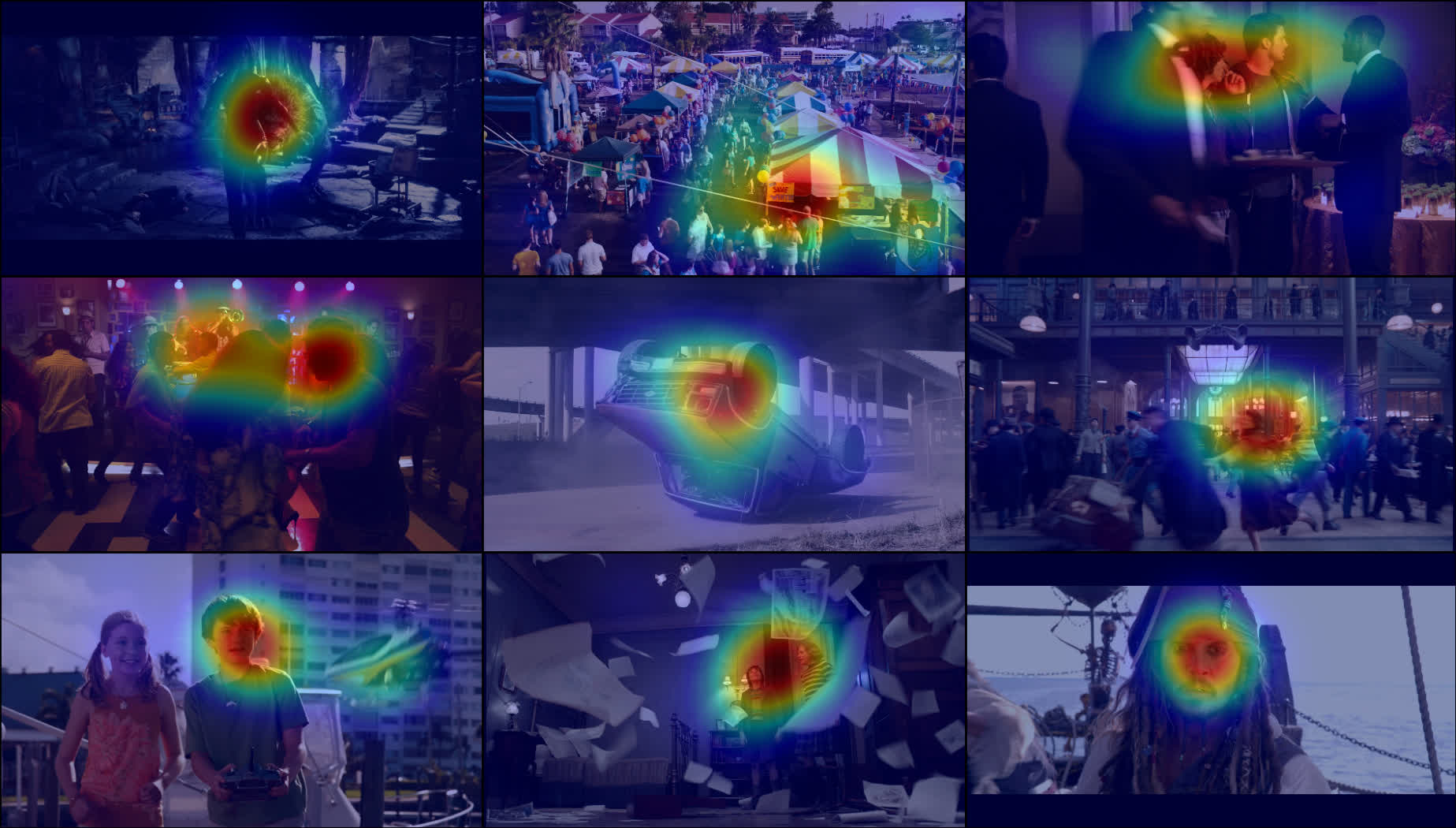

Dataset

The evaluation implementation and the dataset description are available on our GitHub.

We provide a new dataset of ground-truth saliency maps for training and evaluating participants' Video Saliency Prediction methods. Key features of the dataset:

- Diverse content: movie fragments, sports streams, live captions, and vertical videos;

- High resolution: 1080p streams;

- Audio information;

- Large size:

  - 1500 videos;

  - Train/test split of 2/1

- Fixations from > 5000 unique observers and > 50 observers per video

- Free license CC-BY

We apply best practices in crowdsourced mouse tracking to collect saliency data at this scale. As proposed in SALICON[1] and Mouse-Tracking[2], each participant sees a blurred screen except for the area around the cursor, which motivates them to move the cursor to salient areas. To match one degree of visual angle when blurring saliency maps, we conducted an ablation study on the average screen sizes and viewing distances of crowdsourcing participants.
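To make the "one degree of visual angle" notion concrete, the sketch below converts a visual angle into on-screen pixels from the screen width and the viewing distance. The function name and the example screen parameters are hypothetical values chosen for illustration; they are not the figures obtained in the ablation study.

```python
import math


def pixels_per_degree(screen_width_cm, screen_width_px, viewing_distance_cm, degrees=1.0):
    """Approximate on-screen extent, in pixels, of a given visual angle."""
    # Physical size subtended by the angle at the given viewing distance.
    size_cm = 2.0 * viewing_distance_cm * math.tan(math.radians(degrees) / 2.0)
    # Convert centimeters to pixels using the horizontal pixel density.
    return size_cm * screen_width_px / screen_width_cm


# Hypothetical setup: a 34.5 cm wide, 1920 px wide laptop screen viewed from 60 cm.
ppd = pixels_per_degree(screen_width_cm=34.5, screen_width_px=1920, viewing_distance_cm=60.0)
```

Because crowdsourcing participants use different screens at different distances, a single blur radius can only match one degree on average, which is why average screen sizes and distances matter here.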

Additionally, we run several checks during crowdsourced data collection: validation videos (with an obvious saliency map obtained with an eye-tracker, located far from the center to filter out center-biased participants), audio captchas (to check that the participant has a sound device enabled), a reaction speed test before participation, and hardware checks (e.g., screen resolution not lower than 1280x720).

We validate the whole pipeline on the open saliency dataset SAVAM[3], which was collected with professional eye-tracking. On the observed metrics, the pipeline's saliency maps substantially outperform existing automatic saliency prediction models. We provide code for the generation of saliency maps from fixations and metrics computation.
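As a rough illustration of what generating a saliency map from fixations involves, the sketch below places an isotropic Gaussian at each fixation point and sums them, which is equivalent to blurring a fixation map. It is not the organizers' released code: the function name, the peak normalization, and the `sigma_px` parameter (which would be chosen to correspond to about one degree of visual angle) are assumptions for this example.

```python
import numpy as np


def fixations_to_saliency(fixations_xy, height, width, sigma_px):
    """Sum a Gaussian centered on every (x, y) fixation and scale the peak to 1."""
    ys, xs = np.mgrid[0:height, 0:width]
    saliency = np.zeros((height, width), dtype=np.float64)
    for x, y in fixations_xy:
        squared_dist = (xs - x) ** 2 + (ys - y) ** 2
        saliency += np.exp(-squared_dist / (2.0 * sigma_px ** 2))
    peak = saliency.max()
    # Leave an all-zero map untouched when a frame has no fixations.
    return saliency / peak if peak > 0 else saliency
```

The explicit loop keeps the sketch dependency-free; for full 1080p frames with many fixations, filtering a binary fixation map with a separable Gaussian is usually much faster.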

The dataset will be available as soon as the challenge starts.

Participate

To participate in the challenge, you must register on the “Participate” page. There you can also read about the submission format and upload the results of your method. The submission rules are described on the “Terms and Conditions” page.

A leaderboard is automatically built based on the results of metrics testing. You can find it on the “Leaderboard” page.

The results of the challenge will be published at the AIM 2024 workshop and in the ECCV 2024 Workshops proceedings. All participants are invited (but not required) to submit descriptions of their solutions to the associated AIM workshop at ECCV 2024.

Organizers

- Alexey Bryncev

- Andrey Moskalenko

- Dmitry Vatolin

- Radu Timofte

If you have any questions, please e-mail video-saliency-challenge-2024@videoprocessing.ai.

Other challenges can be found on the AIM 2024 Page

Citation

@inproceedings{aim2024vsp,

title={AIM 2024 Challenge on Video Saliency Prediction: Methods and Results},

author={Andrey Moskalenko and Alexey Bryntsev and Dmitriy S Vatolin and Radu Timofte and others},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV) Workshops},

year={2024}

}

- [1] M. Jiang, S. Huang, J. Duan, and Q. Zhao, “SALICON: Saliency in Context,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015

- [2] V. Lyudvichenko and D. Vatolin, “Predicting video saliency using crowdsourced mouse-tracking data,” CEUR Workshop Proceedings, 2019

- [3] Y. Gitman, M. Erofeev, D. Vatolin, B. Andrey, and F. Alexey, “Semiautomatic visual-attention modeling and its application to video compression,” 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 2014, pp. 1105-1109