Compressed Video Quality Assessment Challenge 2024

Compressed Video Quality Assessment Challenge (CVQAC ECCV-AIM 2024)

- Subjective video quality dataset built with a large-scale set of 15+ codecs at multiple encoding presets and scored by 20K participants in subjective comparisons

- Evaluation using three widely used correlation coefficients: SROCC, PLCC, and KROCC

- The final leaderboard will be based on the hidden test set and will not be shown until the end of the challenge

News and Updates

- May 11th, 2024 - Compressed Video Quality Assessment Challenge Announced

- June 4th, 2024 - Challenge has started: the CVQAC dataset is published and submissions are being accepted!

- July 15th, 2024 - Challenge timeline updated

- July 25th, 2024 - Code Sharing phase has started

- August 6th, 2024 - Final leaderboard and subjective scores on the test set have been published (see “Challenge Data” below)

Challenge Report

Paper (arxiv.org): AIM 2024 Challenge on Compressed Video Quality Assessment results

Motivation

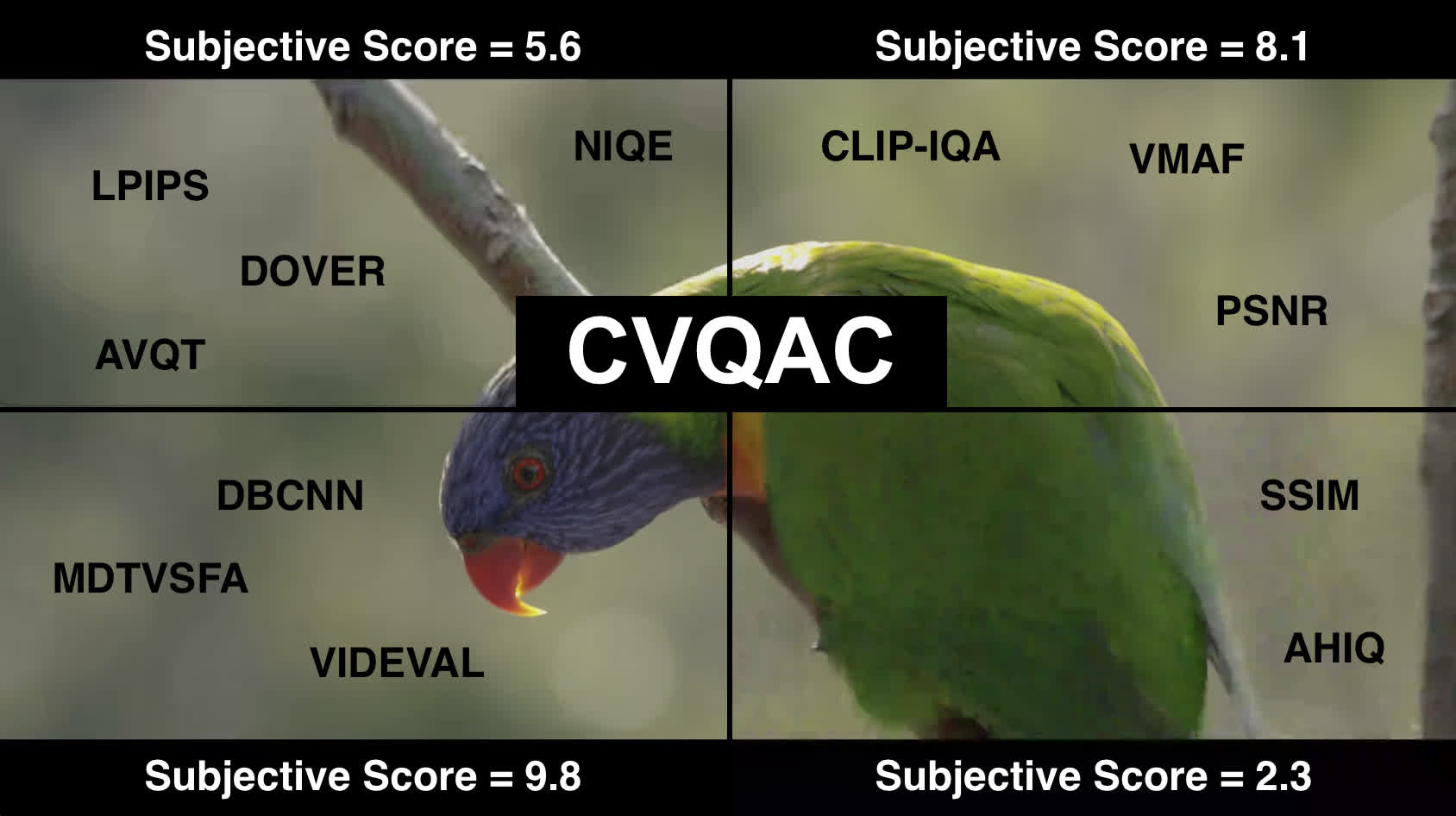

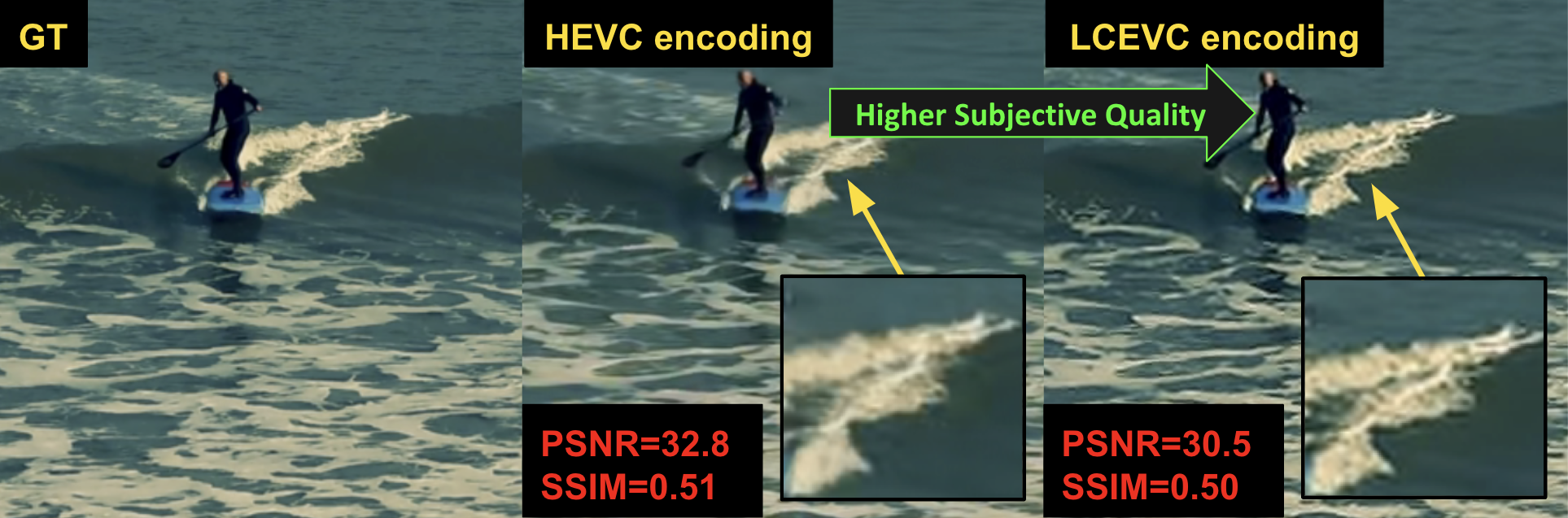

To keep costs low while maintaining high viewership, companies and individuals must find a tradeoff between compression bitrate and encoded video quality. They typically use quality metrics to achieve this, making compressed video quality assessment (CVQA) vital for any platform that offers video-on-demand, conferencing, streaming, or video codec development. However, with the emergence of modern codecs that use deep learning, it is increasingly important to use quality assessment methods that can capture not only the common artifacts produced by traditional codecs, such as those of the H.265 standard, but also the specific and poorly researched artifacts of contemporary codecs.

This need defines the goal of our competition: to extend the “Video Quality Metrics” benchmark of general-purpose methods with powerful methods designed specifically for compressed video quality assessment, and to obtain an overview of current trends.

The objective is to develop a video or image quality assessment (VQA/IQA) method that is robust to complex compression artifacts. It will be tested not only on diverse content (including user-generated content, UGC), but primarily on artifacts produced by a range of encoding presets of different compression standards, with various speed/quality and bitrate settings.

Our group has over 17 years of experience, and we will do our best to ensure that your participation in the challenge is a pleasant experience. We invite you to contribute to the research on video quality assessment methods and encourage its advancement.

Challenge Timeline

- June 4th: Development and Validation phases (training and validation data are released, validation server is opened)

- July 25th (originally July 14th): Test phase (final test data is released)

- July 30th (originally July 19th): Closing phase (submissions on the test data and code sharing deadline)

- August 6th (originally July 21st): Preliminary test results released to the participants

- August 8th: Challenge paper version verification from teams

- August 11th (originally August 4th): Challenge paper submission deadline for entries from the challenges

Challenge Data

We provide an extensive dataset consisting of pristine (reference) videos and their encoded versions, covering various compression artifacts, as well as subjective scores for each video. The training set consists of the CVQAD dataset, which is the open part of the MSU Video Quality Metrics Benchmark dataset. The validation and test parts for this challenge were collected separately and had not been shared until now.

Key dataset features:

- 15+ codecs of different compression standards (H.265/HEVC, H.266/VVC, AV1, H.264/AVC, …)

- 40+ diverse Full HD reference scenes, producing around 1500 distorted streams

- 20K participants in the subjective evaluation

You can find more information about the full dataset collection in the paper: Video compression dataset and benchmark of learning-based video-quality metrics.

Download Data

Download Challenge Data (Train, Validation, Testing, including GT)

The Entire Dataset with Subjective Scores:

https://drive.google.com/drive/folders/1zzb79MePN9V9HBig0_xcuVIb8pcpSpxP?usp=sharing

Training part:

You can use wget to download the videos (without subjective scores)

wget https://calypso.gml-team.ru:5001/fsdownload/O5NMeQMIQ/ECCV-AIM-CVQAC-videos-train.zip

Validation part (public testing):

You can use wget to download the videos

wget https://calypso.gml-team.ru:5001/fsdownload/MT0l4sk1B/ECCV-AIM-CVQAC-videos-val.zip

Testing part (private testing):

You can use wget to download the videos

wget https://calypso.gml-team.ru:5001/fsdownload/grDBiQJrc/ECCV-AIM-CVQAC-videos-test.zip

Data structure

Subjective_scores_train/validation/test.csv contains subjective scores for each compressed video. Besides its subjective quality score, each distorted video has the following attributes:

- codec used for encoding

- encoding preset

- CRF target bitrate

- nickname of the original (pristine) video
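As an illustration of working with these attributes, here is a minimal sketch that reads a scores file with Python's standard `csv` module and averages the subjective scores per codec. The column names (`codec`, `preset`, `crf`, `reference`, `subjective_score`) and the sample rows are assumptions made for this example; check the header row of the actual CSV and adjust them.

```python
import csv
import io
from collections import defaultdict

# Hypothetical column names; the real header of the challenge CSV may differ.
SCORE_COLUMN = "subjective_score"
CODEC_COLUMN = "codec"


def mean_score_per_codec(csv_file):
    """Return {codec: mean subjective score} for rows read from an open CSV file."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in csv.DictReader(csv_file):
        codec = row[CODEC_COLUMN]
        totals[codec] += float(row[SCORE_COLUMN])
        counts[codec] += 1
    return {codec: totals[codec] / counts[codec] for codec in totals}


# Tiny in-memory stand-in for a scores file (made-up values, not challenge data).
sample = io.StringIO(
    "codec,preset,crf,reference,subjective_score\n"
    "codec_a,fast,30,scene_1,2.0\n"
    "codec_a,fast,40,scene_1,1.0\n"
    "codec_b,fast,30,scene_1,3.0\n"
)
means = mean_score_per_codec(sample)
print(means)
```

With a real file, the same function can be called on a handle opened with `open(path, newline="")`, which is the mode the `csv` module documentation recommends.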

Compressed_and_GT_videos contains folders, each of which includes 1 reference video (GT), which is required to test full-reference methods, and many distorted videos (compressed), grouped by encoding preset:

    ├── <Video name 1>
    |   ├── <Encoding preset 1>
    |   |   ├── <Codec name 1> _<crf 1>.mp4
    |   |   ├── <Codec name 1> _<crf 2>.mp4
    |   |   ├── <Codec name 2> _<crf 1>.mp4
    |   |   ├── GT.mp4 # reference (pristine) video
    ├── <Video name 2>
    ...
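For full-reference methods, each distorted video has to be matched with the reference in its folder. The sketch below shows one way to do this with `pathlib`. It assumes that every top-level folder contains exactly one `GT.mp4` somewhere inside it and that all other `.mp4` files in that folder are compressed versions; the function name and the demo folder and file names are hypothetical.

```python
import tempfile
from pathlib import Path


def pair_with_reference(dataset_root):
    """Yield (reference_path, distorted_path) for every compressed video.

    Assumes each top-level folder holds exactly one GT.mp4 somewhere inside it
    and that every other .mp4 file in that folder is a distorted version.
    """
    video_dirs = sorted(p for p in Path(dataset_root).iterdir() if p.is_dir())
    for video_dir in video_dirs:
        references = list(video_dir.rglob("GT.mp4"))
        if len(references) != 1:
            continue  # skip folders that do not match the assumed layout
        reference = references[0]
        for distorted in sorted(video_dir.rglob("*.mp4")):
            if distorted.name != "GT.mp4":
                yield reference, distorted


# Demo on a throwaway directory with empty placeholder files (made-up names).
with tempfile.TemporaryDirectory() as root:
    preset_dir = Path(root) / "scene_1" / "fast"
    preset_dir.mkdir(parents=True)
    for name in ("GT.mp4", "codec_a_30.mp4", "codec_b_30.mp4"):
        (preset_dir / name).touch()
    pairs = [(ref.name, dist.name) for ref, dist in pair_with_reference(root)]

print(pairs)
```

Searching recursively for `GT.mp4` keeps the sketch independent of whether the reference sits next to the preset folders or inside one of them.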

Participate

To participate in the challenge, you have to register on the “Participate” page. There you can also read about the submission format and upload your method scores. The submission rules are described on the “Terms and Conditions” page.

The leaderboard is built automatically from the test results of the submitted methods. You can find it on the “Leaderboard” page.

The results of the challenge will be published at the AIM 2024 workshop and in the ECCV 2024 Workshops proceedings. All participants are invited, though not required, to submit descriptions of their solutions to the associated AIM workshop at ECCV 2024.
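The challenge evaluates methods with SROCC, PLCC, and KROCC between predicted and subjective scores. To check a method locally before submitting, the three coefficients can be computed as in the sketch below. It uses only the standard library and textbook definitions (Kendall's tau-b for KROCC); how the organizers aggregate the coefficients over the dataset is not specified here, so this is an approximation of the evaluation, not the official scoring script. The score values are made up.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def plcc(x, y):
    """Pearson linear correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))


def krocc(x, y):
    """Kendall rank-order correlation (tau-b, which corrects for ties)."""
    concordant = discordant = only_x_tied = only_y_tied = 0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # a pair tied in both variables adds to no count
            if dx == 0:
                only_x_tied += 1
            elif dy == 0:
                only_y_tied += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    untied_in_x = concordant + discordant + only_y_tied
    untied_in_y = concordant + discordant + only_x_tied
    return (concordant - discordant) / math.sqrt(untied_in_x * untied_in_y)


# Made-up scores: the metric swaps the order of the second and third videos.
subjective = [1.0, 2.0, 3.0, 4.0, 5.0]
predicted = [0.1, 0.4, 0.3, 0.8, 0.9]
results = {
    "SROCC": srocc(subjective, predicted),
    "PLCC": plcc(subjective, predicted),
    "KROCC": krocc(subjective, predicted),
}
print(results)
```

In practice, `scipy.stats.spearmanr`, `scipy.stats.pearsonr`, and `scipy.stats.kendalltau` provide the same coefficients and are the more common choice when SciPy is available.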

Organizers

- Aleksandr Gushchin

- Anastasia Antsiferova

- Maksim Smirnov

- Dmitry Vatolin

- Radu Timofte

If you have any questions, feel free to reach out at compressed-vqa-challenge-2024@videoprocessing.ai

Other challenges can be found on the AIM 2024 Page

Citation

    @inproceedings{aim2024cvqa,
      title={AIM 2024 Challenge on Compressed Video Quality Assessment: Methods and Results},
      author={Maksim Smirnov and Aleksandr Gushchin and Anastasia Antsiferova and Dmitriy S Vatolin and Radu Timofte and others},
      booktitle={Proceedings of the European Conference on Computer Vision (ECCV) Workshops},
      year={2024}
    }